2021

Dr. Charles Isbell

John P. Imlay Jr. Dean, Georgia Institute of Technology, College of Computing

2020

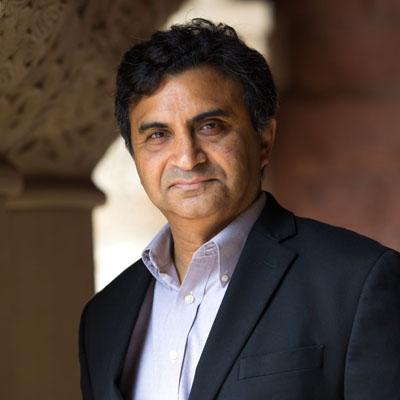

Milind Tambe, PhD

Director of the Center for Research on Computation and Society and Gordon McKay Professor of Computer Science, Harvard University; Director of AI for Social Good at Google Research, India

Roni Rosenfeld, PhD

Professor and Department Head, Machine Learning Department, School of Computer Science, Carnegie Mellon University

Shafi Goldwasser, PhD

RSA Professor of Electrical Engineering and Computer Science, MIT; Professor of Mathematical Sciences, Weizmann Institute of Science; Director of the Simons Institute for the Theory of Computing, UC Berkeley

2019

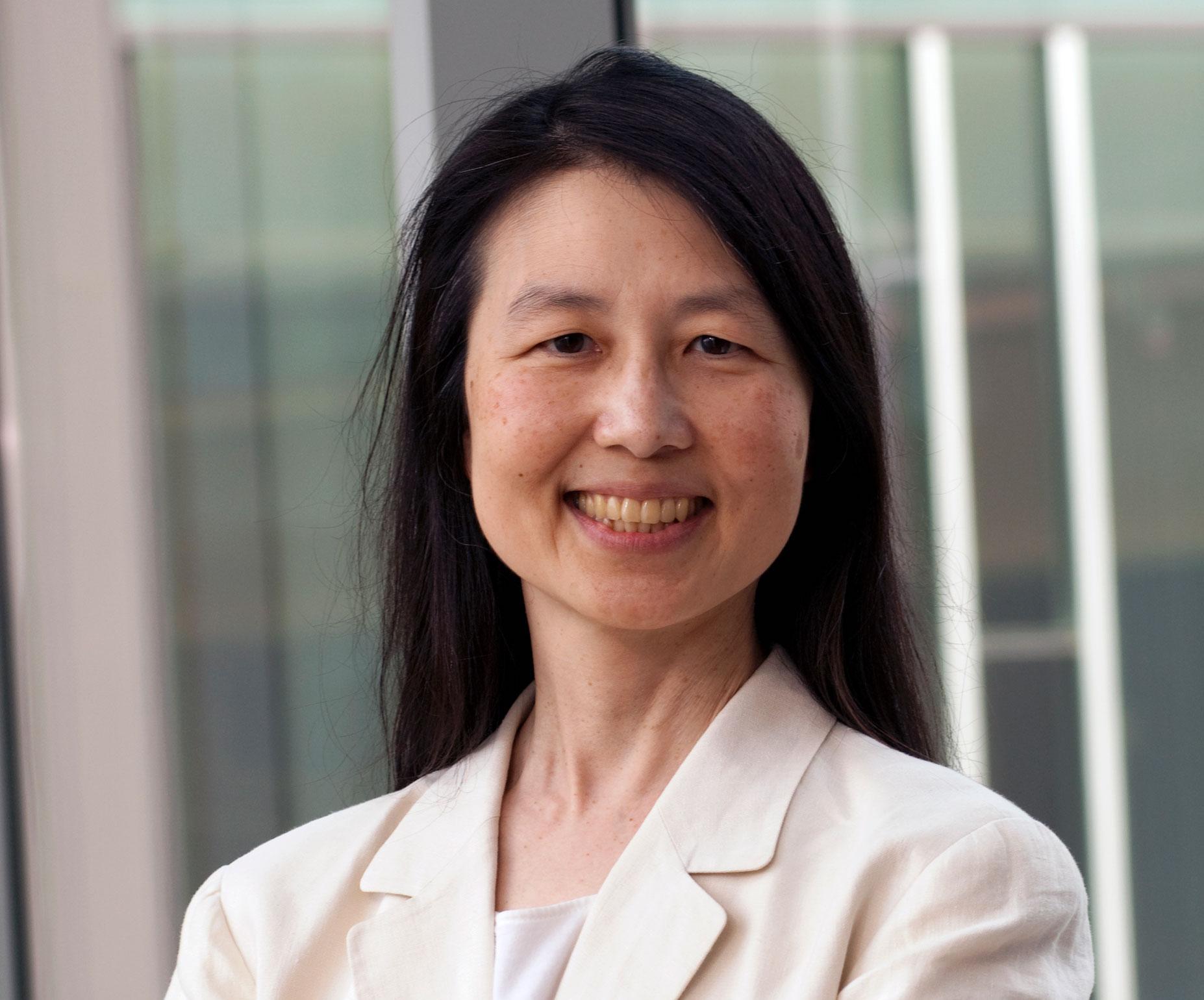

Jeannette Wing, PhD

Avanessians Director of the Data Science Institute & Professor of Computer Science, Columbia University

Michael Wooldridge, PhD

Professor of Computer Science, Head of Department of Computer Science at the University of Oxford

Francesca Rossi, PhD

AI Ethics Global Leader, Distinguished Research Staff Member, IBM Research AI