Reality check

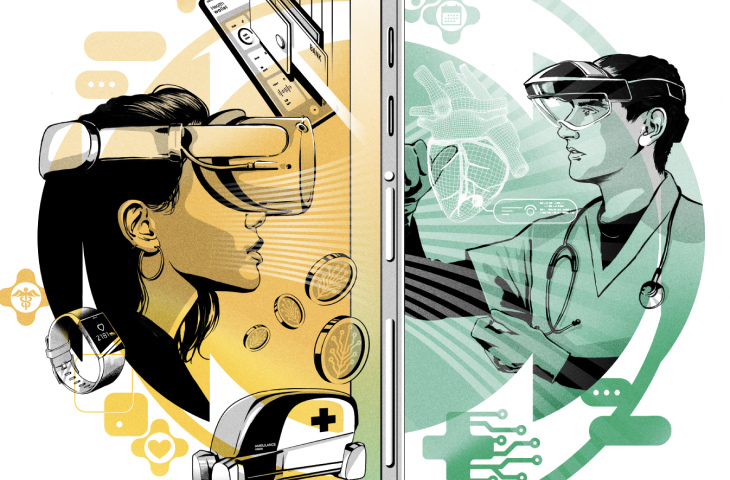

Imagine typing on your computer just by thinking. Or ordering something online without having to lift a finger. Thanks to brain-computer interfaces (BCIs), this may one day become possible. In theory, you could be sitting at home and thinking, “I’m hungry for pizza,” and a pop-up message on your smartwatch or smart glasses would present the menu from your favorite takeaway. Instead of scrolling through the menu and pressing buttons to order, the application would read your thoughts, and in no time your pizza would be delivered to your door.

For online businesses, forever in pursuit of seamless, frictionless customer experiences, this is a vision of nirvana. Right now, it’s pure science fiction—but how likely is it to become reality?

BCIs already exist. They work by acquiring brain signals, analyzing them, and relaying them to external devices. Machine learning does the translation, turning those signals into machine-understandable commands so that people can interact with technology using their minds. So far, BCI applications have mainly been in medicine, for example helping patients with impaired motor abilities to move robotic limbs. But they are edging towards non-clinical uses in areas such as gaming. Researchers are also exploring using thoughts to send emails and browse the web. “This is a transformational technology that will change who we are as humans,” says Rafael Yuste, a Professor of Biology at Columbia University. This year, a number of tech companies are expected to either bring their BCIs into clinical trials or release data from trials already underway.

But for all their promise, BCIs are riddled with challenges.

Until now, most BCI successes have involved the invasive form of the technology, which requires implanting electrodes directly into the brain to record its signals. Because such a procedure is medically risky and governed by strict regulations, it’s hard to imagine these sorts of BCIs becoming commercially pervasive. “It certainly inhibits broad and rapid distribution,” says Timothy Marler, a senior research engineer at the RAND Corporation. Non-invasive neurotechnologies instead record brain signals from outside the skull via a wearable headset. But this approach has a drawback: the signal of interest can be drowned out by the brain’s background noise, which makes the relevant information difficult to detect.

There are also issues with processing the signal, even when it can be detected. Say, for example, you want to add extra cheese to your pizza: you’d need an algorithm that deciphers the specific brain patterns related to this command. The problem, explains Camille Jeunet-Kelway, a research scientist at the French National Center for Scientific Research, is that these algorithms are too often error-prone. What makes it harder is that each of us has different brain patterns, much as our voices have different accents. These algorithms must therefore be calibrated to the individual using the device. Not only that, but users also have to learn to generate “clean” commands via their brain patterns. This can be achieved through neurofeedback training, but it takes time. “If at the origin the user does not generate a high-quality signal, then the best recording methods and algorithms will be useless,” Jeunet-Kelway says. For now, this makes a powerful one-size-fits-all technology hard to envision.

Against this backdrop, however, there has been progress. First, work has been done to improve methods for recording brain signals through the scalp. Non-invasive BCIs typically use electroencephalogram (EEG) headsets, whose signal-to-noise ratio can be improved by amplifying the signal before it is processed. Another option is to use magnetoencephalography (MEG) headsets, which are less sensitive to irrelevant brain signals that contaminate the command.

Second, advances are being made in processing the signal: a recent paper explores using AI to decode speech recorded with a non-invasive headset. “This particular paper is the first time that researchers have used advanced deep neural networks to decode, so that’s the breakthrough,” Yuste says. “I think within a decade we will see non-invasive decoding of simple commands and speech available in the consumer electronics market, which could open the door to decoding mental activity and intentions.”

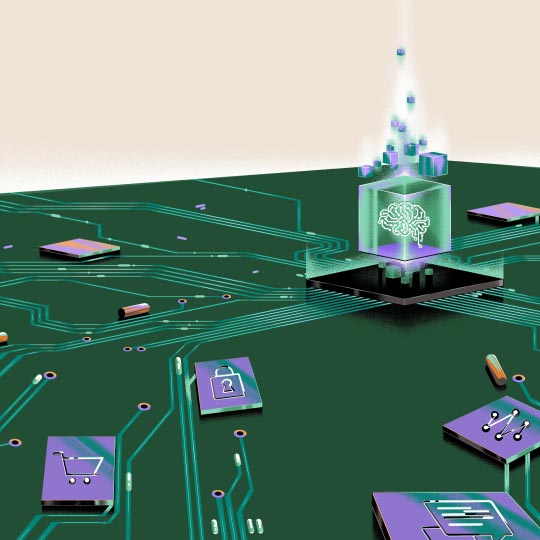

All of this has heightened awareness of the social risks surrounding BCIs, particularly concerning data privacy. BCIs do not read only the brain signals tied to a specific action; they also capture ancillary data that could reveal our private thoughts, desires and emotions. “Even though all the noise might not make sense now, there’s no reason why, in a few years, a machine won’t be able to decrypt it,” says Frederic Gilbert, a neuro-ethicist at the University of Tasmania, Australia, who studies BCIs.

Yuste believes we should learn a lesson from how difficult it has proven to regulate social media after it became ubiquitous. Ethical guardrails, he argues, should be established long before advanced applications such as ‘pay by thought’ emerge. “Since we already know the negative sides of neurotechnology, we can put a protection in place now,” he says. “Let’s not wait until it’s too late.”

BY WIRED