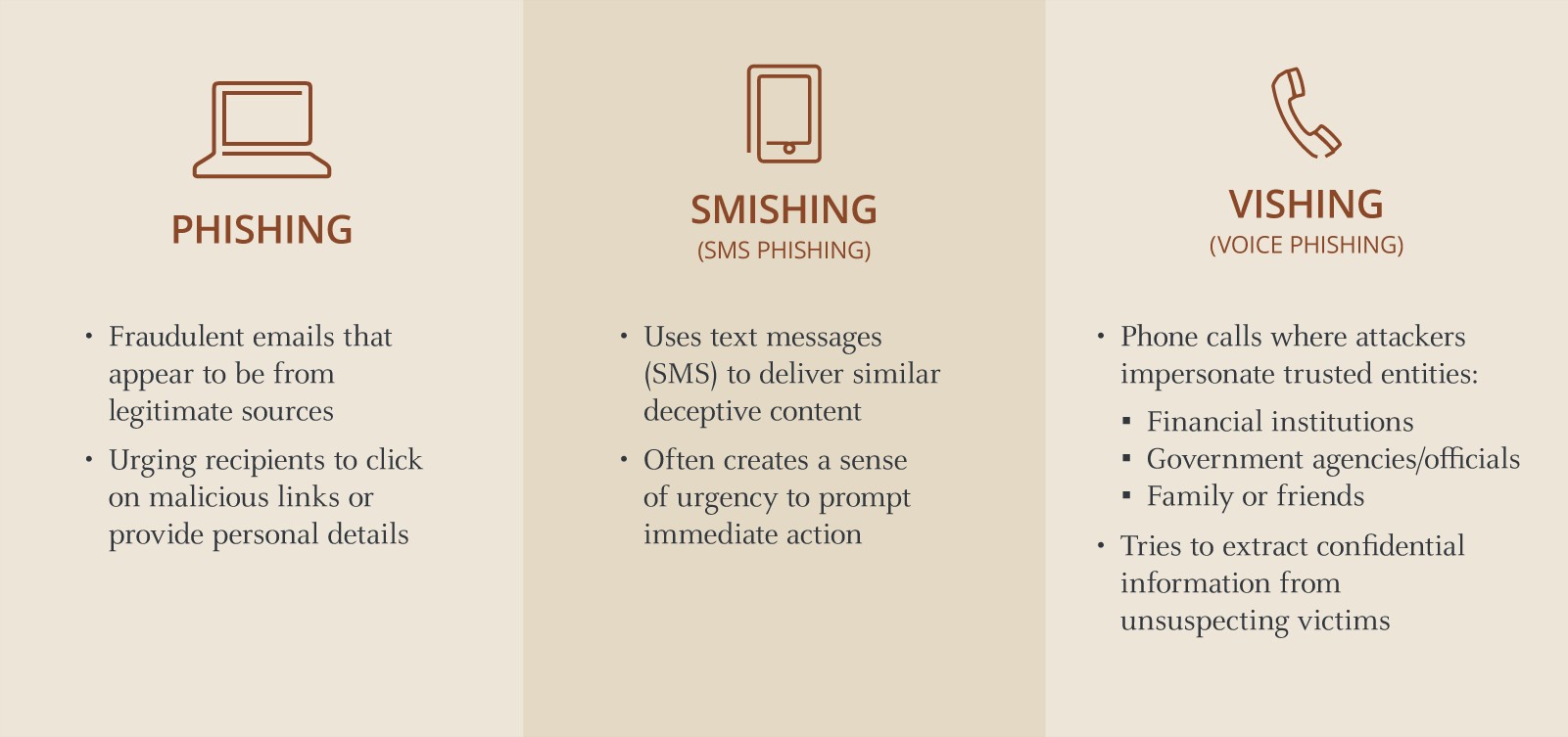

Phishing

Phishing is a cyberattack method that relies on fraudulent emails designed to look like they come from legitimate sources. These emails often impersonate trusted organizations, such as banks or popular online services, to trick recipients into believing the message is authentic. The goal is to lower the target’s guard and provoke engagement.

Smishing

Smishing, or short message service (SMS) phishing, is a technique where attackers use text messages to deliver deceptive content. These messages often appear to come from reputable companies or government agencies, making them seem trustworthy. The goal is to lure recipients into clicking on malicious links or sharing personal information.

Vishing

Vishing, or voice phishing, involves attackers making phone calls or leaving voice messages to impersonate trusted entities such as financial institutions, government agencies or even family and friends. The caller’s objective is to gain the victim’s trust and extract confidential information, such as account numbers, passwords or Social Security numbers.

Examples of phishing and smishing scams

Consider the case of Jane, a successful entrepreneur who fell victim to a cleverly crafted phishing scam. An email, seemingly from her bank, requested urgent verification of her account details. Trusting the familiar branding and professional tone, Jane complied, only to find her accounts and her business compromised.

Let’s look at John, a tech-savvy individual who received a text one evening claiming to be from his bank, warning of suspicious account activity. Urged to click a link to verify his identity, he did so, entering his login details on a seemingly legitimate site.

The next day, John found unauthorized transactions in his account. Realizing he had been scammed, John contacted his bank, which flagged his accounts and worked to recover the funds. The emotional impact, however, was significant. Determined to learn from this, John shared his story with friends and family, stressing the importance of verifying messages.

In another notable case, an employee at a firm was targeted by cybercriminals who used deepfake audio and video to mimic the voice and likeness of the CEO and instructed the employee to wire a significant amount of money to a fraudulent account. The voice and likeness were convincing enough that the employee complied, believing the instructions were legitimate.

Let’s review some things these individuals could have done to protect themselves.

Strategies to outsmart social engineering attempts

- Stay skeptical: Always question unexpected communications, whether they come via email, text or phone call. If something seems off or too good to be true, it probably is.

- Verify the source: Before clicking on links or providing information, verify the sender's identity. For emails, check the sender's address for inconsistencies. For texts and calls, contact the organization directly using official contact information.

- Look for red flags: Be wary of messages that create a sense of urgency, request personal information, or contain spelling and grammatical errors. These are common signs of phishing, smishing and vishing attempts.

- Do not share personal information: Avoid sharing sensitive information over email, text or phone unless you are certain of the recipient's identity and the necessity of the request.

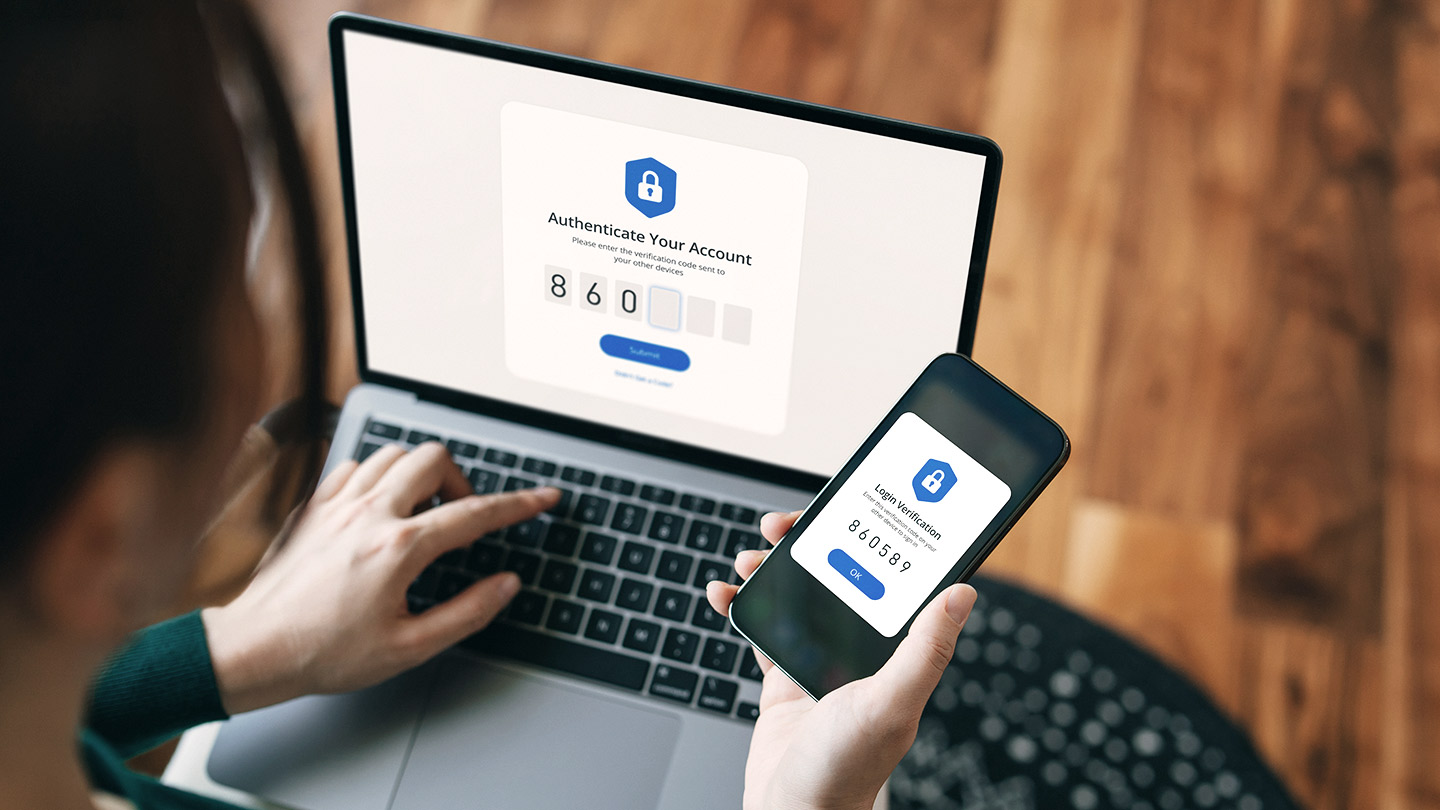

- Use Multi-Factor Authentication (MFA): Enable MFA on your accounts to add an extra layer of security. This requires a second form of verification, such as a text message code or authentication app, making it harder for attackers to gain access.

- Use strong, unique passwords: Create complex passwords for each of your accounts and change them regularly. Consider using a password manager to keep track of them securely.

- Update software and use antivirus software: Regularly update your devices, operating systems, browsers and security software to protect against the latest threats. Use antivirus software on all devices to detect and remove any malicious software that may have been installed.
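The "verify the source" and "look for red flags" checks above can be partly automated. The following is a minimal Python sketch, not a complete filter: the trusted domain `examplebank.com`, the phrase list and the function names are all illustrative assumptions, and a real scam can pass every one of these checks.

```python
import re
from email.utils import parseaddr

# Hypothetical allow-list: domains you already know belong to the organization.
TRUSTED_DOMAINS = {"examplebank.com"}

# Illustrative urgency phrases only; real scams vary widely.
URGENCY_PHRASES = ("urgent", "immediately", "verify your account", "account suspended")


def sender_domain(from_header: str) -> str:
    """Return the lowercase domain of the address in a From header."""
    _, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()


def red_flags(from_header: str, body: str) -> list[str]:
    """Collect simple warning signs; an empty list does NOT prove a message is safe."""
    flags = []
    domain = sender_domain(from_header)
    if domain not in TRUSTED_DOMAINS:
        flags.append(f"sender domain '{domain}' is not on the trusted list")
    lowered = body.lower()
    if any(phrase in lowered for phrase in URGENCY_PHRASES):
        flags.append("message uses urgent language")
    if re.search(r"\b(password|social security number|account number)\b", lowered):
        flags.append("message mentions sensitive information")
    return flags


# A look-alike domain ("examp1ebank.com") with an urgent request for a password.
for flag in red_flags(
    '"Example Bank" <alerts@examp1ebank.com>',
    "URGENT: verify your account and confirm your password.",
):
    print(flag)
```

A check like this only supplements human judgment. When in doubt, contact the organization directly using official contact information.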

By understanding the mechanics of social engineering and implementing these protective measures, individuals and organizations can significantly reduce the risk of falling victim to these tactics.

Artificial intelligence (AI) is enhancing efficiency and innovation across various industries, enabling smarter decision-making, personalized experiences and improved problem-solving capabilities. At the same time, cybercriminals are leveraging AI to exploit individuals and organizations through advanced social engineering attempts, including AI-generated content and deepfakes.

Deepfakes are synthetic media created using AI to alter or fabricate audio, video or images, making it appear as though someone said or did something they did not. This poses a serious threat because it makes it significantly more difficult for individuals and organizations to distinguish between genuine and fabricated content.

Cybercriminals use AI and deepfakes to profile and target individuals and organizations. For example, they can use AI to:

- Create fake identities to commit identity theft or gain unauthorized access to sensitive information: Cybercriminals leverage AI to generate realistic images and videos of non-existent people to create fake social media profiles or impersonate real individuals.

- Manipulate audio and video to spread misinformation, damage reputations or manipulate public opinion: Cybercriminals use deepfakes to alter existing audio and video to make it appear as though someone said or did something they did not.

- Commit social engineering attacks and scams: Cybercriminals use AI-generated content in phishing, smishing and vishing attacks to create more convincing emails, messages or calls that appear to come from trusted sources and deceive individuals into acting on them.

As AI-driven threats and scams are on the rise, it is crucial for individuals and organizations to implement essential cybersecurity protections to counter these ever-evolving threats and to stay a step ahead of cybercriminals.

Here are more ways to remain vigilant in the face of a possible cyberattack.

- Verify the authenticity of audio, video and images, especially if they involve sensitive information or requests. Pause before taking action and take a moment to review the request's legitimacy, as deepfakes and other impersonations may be difficult to detect. Do not assume a request is genuine just because the requester knows information about you, your family or your company.

- Verify instructions through multiple communication channels and trusted methods, such as a direct phone call or face-to-face meeting with the person making the request, before acting on any financial instructions.

- Establish a standard protocol for verifying unusual or high-value requests, which could include requiring written confirmation or approval from multiple senior executives.

- Establish a unique safe word or phrase for verification that is known only to the parties involved in sensitive communications. This safe word or phrase can be used to verify the authenticity of requests or instructions, especially in situations where deepfake technology might be used to impersonate someone. If the safe word or phrase is not used or is incorrect, it can serve as a red flag to question the legitimacy of the communication.

- Enable Multi-Factor Authentication (MFA) for financial transactions to ensure that additional verification steps are required before any transfer is authorized, making it significantly harder for cybercriminals to access your information.

- Restrict access to sensitive information and transaction capabilities to a small number of trusted individuals within the firm.
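Several of the measures above (confirmation through a second channel, a safe word, and approval from multiple people for high-value requests) can be combined into one written policy. The Python sketch below shows one possible shape for such a check. The threshold, the number of approvers, and every name in it are hypothetical, and each firm would set its own values.

```python
from dataclasses import dataclass, field

# Hypothetical policy values; each firm would set its own.
HIGH_VALUE_THRESHOLD = 10_000
REQUIRED_APPROVALS = 2


@dataclass
class TransferRequest:
    amount: float
    requested_by: str
    confirmed_out_of_band: bool = False  # e.g. a call-back on a known phone number
    safe_word_matched: bool = False
    approvers: set[str] = field(default_factory=set)


def may_execute(request: TransferRequest) -> bool:
    """Return True only when every check in this illustrative policy passes."""
    if not (request.confirmed_out_of_band and request.safe_word_matched):
        return False
    if request.amount < HIGH_VALUE_THRESHOLD:
        return True
    # The requester cannot count as one of their own approvers.
    independent = request.approvers - {request.requested_by}
    return len(independent) >= REQUIRED_APPROVALS


# A convincing video call alone is not enough to release funds.
request = TransferRequest(amount=250_000, requested_by="ceo")
print(may_execute(request))
```

The design choice here is that no single signal, however convincing, authorizes a transfer: a deepfake that imitates the requester still has to pass the call-back, the safe word and independent approvals.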

To best protect your information, reduce your digital footprint so that cybercriminals have less material to use in creating convincing deepfakes or targeted social engineering scams. Sharing personal details online poses substantial risks, especially now that AI tools can exploit this data for cyberattacks.

Example of an AI-engineered scam

An AI-driven scammer analyzed Julie’s online footprint, including her work details and industry-specific jargon, to create a highly personalized phishing email. The AI-generated email appeared to be from a colleague and referenced specific projects, convincing Julie of its authenticity. When Julie clicked on the malicious link in the email, it compromised her company's sensitive data and gave the scammer access to internal systems, leading to a larger cyberattack that caused financial and reputational damage to the company.

Given the highly convincing and sophisticated nature of AI-driven threats and scams, it is essential for individuals and organizations to adopt fundamental yet vital cybersecurity measures to counter these ever-evolving threats.

Let’s review what Julie should have done before clicking on the link in the phishing email.

- Recognize advanced social engineering warning signs: With AI and deepfake technologies, cybercriminals are creating realistic content that mimics trusted sources, making scams appear more authentic and convincing than ever before. It is crucial to verify the authenticity of any unsolicited communication through a separate, trusted channel.

- Do not put personal information into AI tools, as they may capture your data and potentially share it, posing privacy and security risks. This can lead to unauthorized access, identity theft and loss of control over how your information is used.

- Stay informed of the latest developments in AI and deepfake technology, as well as emerging social engineering threats and scams.

- Regularly educate family members, employees, colleagues, etc. about threats in the cybersecurity landscape, especially the risks associated with deepfakes and AI scams, emphasizing the importance of skepticism and verification.

Fostering a culture of awareness and vigilance is a crucial step in defending against social engineering attacks. By recognizing the signs and implementing robust security measures, individuals and organizations can better protect themselves from the ever-evolving threat landscape.

Most importantly, always question unexpected communications, whether they come via email, text or phone call. Even if a communication appears to be from a trusted source, verify its legitimacy through a separate, trusted channel.

Your J.P. Morgan advisor can provide practical guidance and resources on how to protect you, your family and your business.